How to Create Speech‑to‑Text Software for Languages with Many Dialects

January 31, 2023

By Zuzana Ďaďová in Blog

English is the official language in 58 countries, 16% of the world's population speaks Chinese, Hindi is the third most spoken language in the world with more than 600 million speakers, and Spanish is the native language of 483 million people in more than 20 countries worldwide.

Many of the world's languages are spoken across vast geographical territories and therefore show great diversity in their lexical, phonetic, and grammatical features.

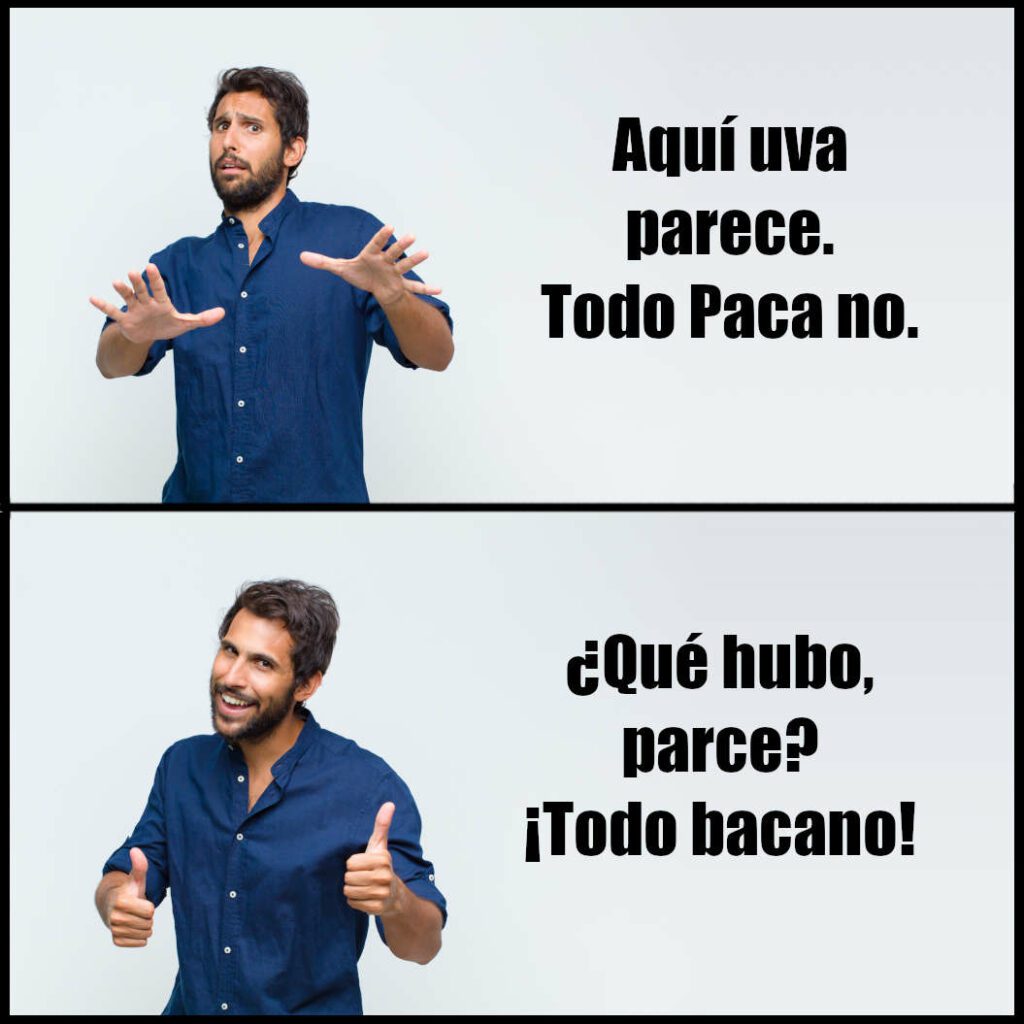

In Spanish alone, there are numerous ways of saying "estoy bien" or "¿dónde andas?" or simply asking "¿qué tal?" Such diversity cannot be compared with that of a language spoken in a country like Sweden or the Czech Republic, each with roughly 10 million speakers. This diversity never ceases to fascinate linguists and the speakers themselves. At the same time, so many dialectal differences matter in the world of speech recognition because they present a challenge for speech-to-text engineers and scientists.

What Do Speech-to-Text Users Need?

Some prospective users may doubt how well speech to text will transcribe their speech. If they have experienced their dialect being misunderstood, or know that it differs markedly from the standard, they may come to distrust speech recognition as a whole.

This problem is (comically) played out in the famous video about two Scotsmen entering an elevator run purely on speech recognition. When the door closes, they have to say the number of the floor they wish to go up to. Although one of them is very skeptical of voice recognition systems for Scottish English, they both try anyway and repeatedly utter "eleven" – in a Scottish accent, of course – in order to get the elevator moving. And they grow increasingly frustrated as the elevator's automated voice answers again and again, "I'm sorry, could you please repeat that?"

Although this is a comical sketch, speech-to-text engineers should keep in mind that situations like this do occur often. Companies and public bodies, as well as individual users, need speech transcription technologies to work as accurately as possible, regardless of the speaker's country of origin or, rather, regardless of their native dialect. It is, therefore, necessary to consider the possible dialects a language may have.

What Is a Dialect?

A dialect is a variety of a language shared by a community of speakers in a specific geographic territory, for example, Algerian French, Venezuelan Spanish, or British English in England.

Why Are Some Languages So Varied and Have So Many Dialects?

Language is always a reflection of the society that speaks it. If a country is geographically large (Russia, for example), its culture, society, and inhabitants will be very varied and their ways of life different. Some will live in isolation, others in big cities; some in the east, others in the west; some will be well off and attend the best universities, others will have little social mobility. All this variety is reflected in the language, not only in geographical dialects but also in social dialects (sociolects).

And what happens if, in addition, the language is spoken in several countries? As we see with English, French, and Spanish, dialectal variation increases even more. It can be reinforced by several factors: the political isolation of some countries, the countries' different degrees of involvement in the global economy, differences in the language of the media in each country, or historical and cultural differences. The reasons are many. This diversity is relevant in many professional fields: language teaching, public administration, media, and, of course, speech technologies such as automatic speech-to-text transcription.

What Is Speech to Text?

Speech to text (also known as audio to text or voice to text) is speech recognition software that converts spoken language to text.

What Data Is Needed to Create Speech-to-Text Software?

Every training process needs three components, and all of this data depends on the target language.

- Phonetic dictionary. This contains words and pronunciations according to the pronunciation rules of the language or dialect. Thanks to the dictionary, the system relates letters to specific sounds.

- Acoustic data. This consists of hundreds of hours of recordings in the target language with their respective transcriptions. These acoustic data sets are the most important part of the data fed into the training process. Thanks to them, the acoustic information is related to the written form of the language.

- Textual data. This consists of a huge collection of texts in the given language and is used by the model to calculate the probabilities of words, expressions, and possible word sequences.
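To make the role of the textual data more concrete, here is a minimal sketch of how word-sequence probabilities can be estimated from a text corpus. It uses a simple bigram count model; the four-sentence corpus and the function names are illustrative assumptions, and real systems typically rely on far larger corpora and more sophisticated language models.

```python
from collections import Counter


def train_bigram_model(sentences):
    """Count context words and word pairs in a toy text corpus."""
    contexts = Counter()
    bigrams = Counter()
    for sentence in sentences:
        # Sentence boundary markers let the model learn typical first/last words.
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        contexts.update(tokens[:-1])  # every token that has a successor
        bigrams.update(zip(tokens[:-1], tokens[1:]))
    return contexts, bigrams


def bigram_probability(contexts, bigrams, previous, word):
    """Maximum-likelihood estimate of P(word | previous); 0.0 if unseen."""
    if contexts[previous] == 0:
        return 0.0
    return bigrams[(previous, word)] / contexts[previous]


# Hypothetical corpus, far too small for real use.
corpus = [
    "estoy bien",
    "estoy bien gracias",
    "estoy cansado",
    "dónde andas",
]

contexts, bigrams = train_bigram_model(corpus)
p_bien = bigram_probability(contexts, bigrams, "estoy", "bien")
p_cansado = bigram_probability(contexts, bigrams, "estoy", "cansado")
print(f"P(bien | estoy) = {p_bien:.2f}")
print(f"P(cansado | estoy) = {p_cansado:.2f}")
```

In this toy corpus, "bien" follows "estoy" more often than "cansado" does, so the model would prefer "estoy bien" when the acoustic evidence is ambiguous.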

Now, if all this data is necessary to train, for example, Spanish speech-to-text conversion software, what pronunciation do we choose for the phonetic dictionary? And what variety of Spanish will be spoken in the recordings?
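The dictionary question can be illustrated with a toy example. The sketch below stores a separate pronunciation per dialect for the same written word. The data structure, the dialect labels, and the `lookup` helper are hypothetical, and the IPA transcriptions are simplified (stress is not marked).

```python
# Hypothetical mini phonetic dictionary: word -> dialect -> list of IPA phonemes.
PRONUNCIATIONS = {
    "cena": {
        "european": ["θ", "e", "n", "a"],  # "c" before "e" as /θ/ in much of Spain
        "mexican": ["s", "e", "n", "a"],   # the same letter pronounced /s/
    },
    "calle": {
        "european": ["k", "a", "ʝ", "e"],
        "mexican": ["k", "a", "ʝ", "e"],
        "rioplatense": ["k", "a", "ʃ", "e"],  # "ll" as /ʃ/ around the Río de la Plata
    },
}


def lookup(word, dialect):
    """Return the phoneme list for a word in a dialect, or None if not listed."""
    return PRONUNCIATIONS.get(word, {}).get(dialect)


print(lookup("cena", "european"))
print(lookup("cena", "mexican"))
print(lookup("calle", "rioplatense"))
```

The written form is identical in every dialect, yet the sound sequence the system must learn to associate with it is not, which is why the choice of dictionary matters.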

How to Cover Linguistic Variation in a Speech-to-Text Model

Unlike with small languages, here we must not only consider the accessibility and affordability of the data but also make linguistic decisions based on knowledge of the dialectal situation of the given language.

There are two main approaches:

- Create a uni-dialectal speech-to-text model (or several models), i.e., one for a specific dialect or macro-dialect. Let us continue with the example of Spanish. Suppose we decide to create speech to text for Mexican Spanish. In that case, the phonetic dictionary will specify the typical pronunciation for this variety of Spanish, and the audio will be recorded in Mexican Spanish. This approach has two clear advantages: it is specific (and, therefore, well suited to automatically transcribing Mexican Spanish), and it needs less data, although that data must be dialect-specific, to cover a particular Spanish-speaking region. But beware! This approach has a clear disadvantage: the Mexican model will perform worse when used outside the region, for example, on Colombian, Chilean, or European Spanish.

- Create a multi-dialectal model. If we opt for the more general model covering Spanish from the Americas, Europe, and Africa, the data used will have to be more varied than that of the uni-dialectal model. That is, the dictionary will contain more variants, and the acoustic data sets will come from several dialects, each of which is very distinct and represents a dialectal macro group (European, Caribbean, Andean, North American, Rioplatense, etc.). Of course, such a model will be more expensive because of the required amount of diverse data, but it will be more robust and general, i.e., useful for clients from any Spanish-speaking country.
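As a rough illustration of how the two approaches differ at the dictionary level, the sketch below either picks one dialect's lexicon (uni-dialectal) or merges several lexicons so that each word keeps every distinct pronunciation variant (multi-dialectal). The lexicon contents, the dialect labels, and the `merge_lexicons` helper are assumptions made for this example, not a description of any particular product.

```python
def merge_lexicons(lexicons):
    """Merge per-dialect lexicons into word -> list of unique pronunciation variants."""
    merged = {}
    for dialect in sorted(lexicons):  # sorted for a reproducible variant order
        for word, phonemes in lexicons[dialect].items():
            variants = merged.setdefault(word, [])
            variant = tuple(phonemes)
            if variant not in variants:  # skip pronunciations shared across dialects
                variants.append(variant)
    return merged


# Hypothetical per-dialect lexicons with simplified IPA transcriptions.
uni_dialectal = {
    "european": {"cena": ["θ", "e", "n", "a"], "calle": ["k", "a", "ʝ", "e"]},
    "mexican": {"cena": ["s", "e", "n", "a"], "calle": ["k", "a", "ʝ", "e"]},
    "rioplatense": {"calle": ["k", "a", "ʃ", "e"]},
}

# Uni-dialectal approach: use a single dialect's dictionary as-is.
mexican_only = uni_dialectal["mexican"]

# Multi-dialectal approach: keep every variant found in any dialect.
multi_dialectal = merge_lexicons(uni_dialectal)

for word, variants in multi_dialectal.items():
    print(word, ["".join(v) for v in variants])
```

The merged dictionary is larger and more ambiguous, which mirrors the trade-off described above: broader coverage at the price of more, and more varied, data.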

Conclusion

The diversity of a language can be a major challenge for speech to text, but it is a manageable problem. The two approaches mentioned above – uni-dialectal and multi-dialectal models – have certain advantages and disadvantages. The choice of one or the other depends on the client's intended use, data accessibility, and the specific situation of the language in question.

As for Spanish, when creating the sixth generation of Spanish Speech to Text at Phonexia, we chose a multi-dialectal model. This new generation has not only received numerous technological improvements but also used data from several dialects (Mexican, Caribbean, Andean, European) during its development. As a result, this model achieves very satisfactory results in the automatic transcription of various dialects of Spanish, a fact that is confirmed by several Hispanic-American clients.