What Is Forensic Voice Comparison?

Imagine that you are investigating a crime. You have a recording of a threatening phone call along with other evidence (fingerprints, DNA, etc.). You also have one or several suspects. You need to analyze the voice on the recording and the voices of the suspects, determine how likely it is that the recording of the unknown speaker was produced by one of the suspects, and present the strength of the evidence to a court.

With the widespread use of mobile phones and the increasingly common practice of recording voice, voice recordings have become part of the evidence in many criminal investigations.

Forensic voice comparison (also referred to as forensic speaker comparison, forensic speaker recognition, and forensic speaker identification), or FVC, is the comparison, performed by a forensic practitioner, of one or more voice recordings of an unknown speaker with one or more recordings of a known speaker, in order to help the court identify the offender.

A person’s voice is unique and carries a lot of information, such as where the person grew up and learned to speak, their race and nationality, biological sex, and height, as well as other distinguishing traits that stem from the individual shape, size, and usage of their vocal organs. You can read more about this in our Voice Biometrics Guide.

Therefore, analyzing the unique characteristics of the questioned voice and comparing them with those of the suspect’s voice can strongly support one of two hypotheses: that the voices were produced by the same speaker or that they belong to different speakers.

The problem is that recordings used for forensic voice analysis and comparison are often of poor quality: loud background noise, multiple speakers, long non-speech fragments, reverberation, band-limited channels, lossy compression, etc. Moreover, unlike fingerprints, the human voice can change easily, for example, due to stress, health conditions, intoxication, or simply the speaker’s intention to disguise their voice.

Therefore, forensic voice comparison is not a trivial task. It usually involves trained and highly experienced forensic practitioners and state-of-the-art technologies.

The Difference Between Forensic Voice Comparison and Speaker Identification

Both forensic voice comparison and speaker identification consist of two main steps:

- Extraction of voice features from the questioned and reference recordings

- Comparison of these features

However, there are significant differences between speaker identification and forensic voice comparison.

Comparison Conditions

In forensics, the questioned recording can be of much poorer quality than in a call center due to background noise, telephone-band frequency limitations, reverberation, a long distance from the microphone (for example, if the microphone is covert), and other factors.

A speaker in a call center, on the other hand, is usually cooperative and can be asked to say a particular phrase in a quiet location, whereas in forensics the speaker might not even be aware of being recorded.

The questioned recordings might be too short, or the time interval between the questioned and suspect recordings might be too long.

Moreover, there might be a mismatch between the recording conditions of questioned- and known-speaker recordings.

Human Involvement

Speaker Identification (SID) systems in call centers are, in most cases, fully automated: their task is to accept or reject a caller without human involvement. In forensics, human-supervised automatic methods or combined methods are usually used, and automatic voice comparison is just one part of the voice analysis.

Results Representation

In call-center SID systems, the result of voice comparison is usually a binary decision: the caller is accepted or rejected depending on the voice biometric system’s acceptance threshold, often in combination with other authentication factors.
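A minimal sketch of such a decision rule shows what a binary output discards. The threshold and the scores below are hypothetical (real systems tune their thresholds to their own error-rate targets); the point is that a borderline match and a very strong match produce exactly the same answer.

```python
# Hypothetical acceptance threshold on a similarity score in [0, 1].
ACCEPT_THRESHOLD = 0.7


def verify(score: float, threshold: float = ACCEPT_THRESHOLD) -> str:
    """Call-center style decision: accept the caller if the comparison
    score reaches the threshold, otherwise reject."""
    return "accept" if score >= threshold else "reject"


# A borderline match and a very strong match receive the same answer,
# so the difference in strength never reaches whoever reads the result.
for score in (0.71, 0.99):
    print(score, verify(score))
```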

In forensic cases, the result of voice comparison is used along with other evidence (fingerprints, DNA, witnesses’ testimonies, etc.) to help the court make a decision.

Thus, it influences the court’s decision and, as a consequence, the fate of the people involved.

Therefore, the result of forensic voice comparison (FVC) should be a quantitative measure of the strength of the voice evidence, that is, of how far it tips the scales of justice toward one of the two hypotheses.

That is why forensic voice comparison is more complex and demanding than the speaker identification usually applied in call centers.

Forensic Voice Comparison Techniques

There are four basic approaches to forensic voice comparison: auditory, spectrographic, acoustic-phonetic, and automatic.

The approaches differ in their methods for extracting information from voice recordings, their methods of comparison, and the way they present results.

In forensics, a combination of techniques is often used to analyze voice recordings from all possible perspectives.

The first three approaches can be grouped under the Auditory-Acoustic Framework (AAF). The automatic approach is also called Automatic Speaker Recognition (ASR), Automatic Speaker Comparison, or Automatic Speaker Identification.

Auditory Approach

In an auditory approach, a forensic practitioner trained in auditory phonetics listens to the questioned- and known-speaker recordings. They note the similarities they would expect if both recordings were produced by the same speaker and the differences they would expect if they were produced by different speakers.

The audible differences could be dialectal – for example, replacing the “th” sound with either “t” or “d” in Irish English.

They could also be related to voice quality – creaky or breathy, harsh or soft. Speech impediments are also considered in the auditory approach (lisping, pronunciation of “r” or “l” as “w”, stuttering, etc.).

An untrained listener can recognize some differences, but an expert can notice and document much subtler discrepancies in voice features.

Other voice properties that may be compared include vocabulary choice, pronunciation of particular words and phrases, segmental pronunciation, intonation patterns, stress patterns, speaking rate, and voice source properties.

Most practitioners listen only to the known- and questioned-speaker recordings. Some experts also listen to a set of foil speakers: speakers similar to the known and questioned speakers in age, gender, language, and accent, recorded under similar conditions.

Foil speakers can be considered members of the relevant population, but there are too few of them to form a representative sample.

The practitioner carefully documents all the voice similarities and differences they consider important and then presents them in court.

In the auditory approach, the results are qualitative, and no statistical models are used.

In general, using an auditory approach alone is quite uncommon.

Many practitioners use an auditory-acoustic-phonetic approach.

In addition to listening, they measure acoustic-phonetic values and present them in tables or plots. Then, the values for the questioned, known, and foil speakers are compared.

The features usually analyzed in the auditory-acoustic-phonetic approach include:

- Voice quality

- Intonation

- Pitch

- Articulation rate

- Rhythm

- A large set of consonantal and vowel features

- Speech impediments

- Linguistic features (discourse markers, lexical choices, call opening habits, etc.)

- Non-linguistic features (breathing, throat-clearing habits, etc.)

Spectrographic Approach

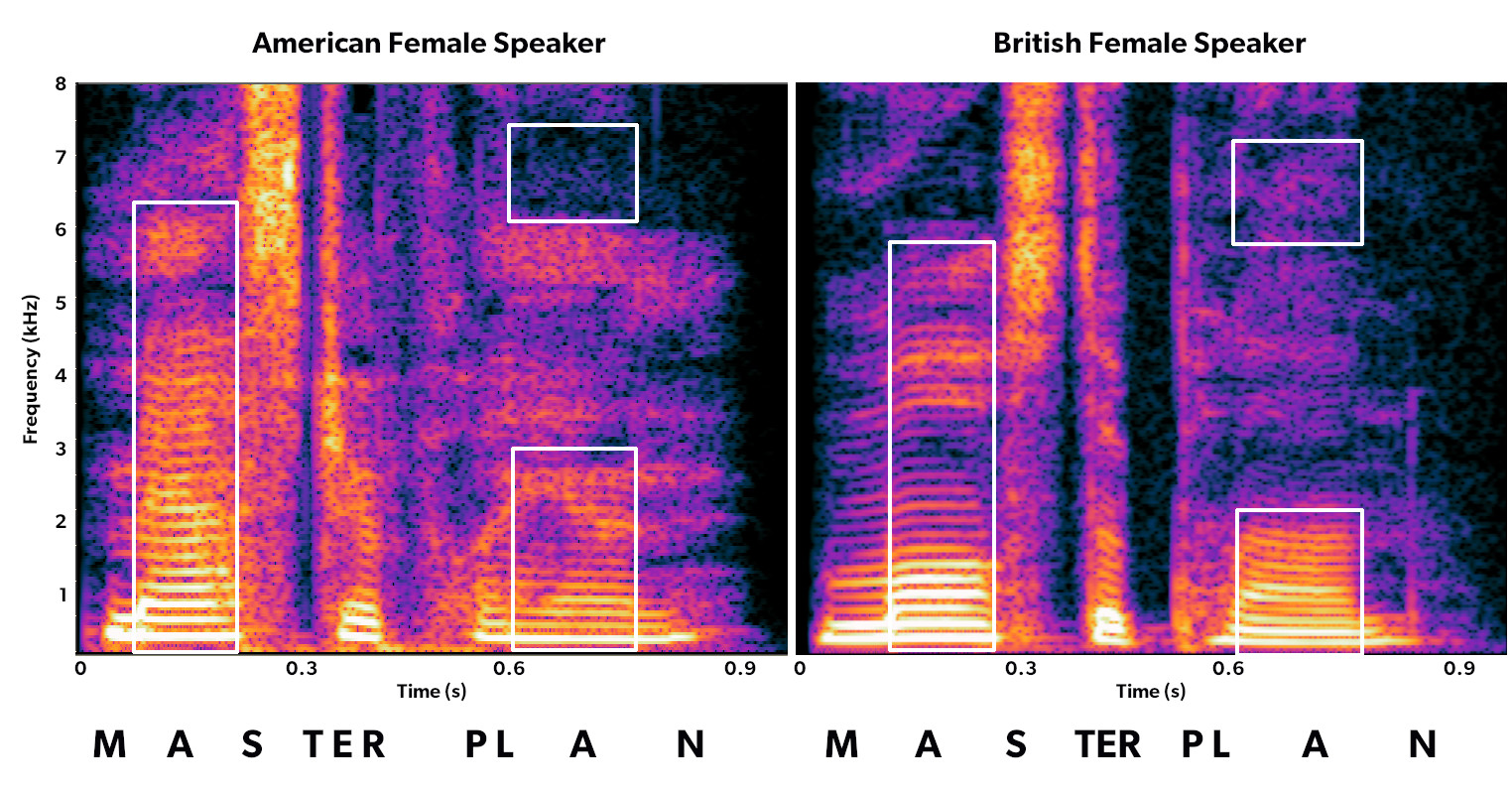

This approach involves making a spectrogram of a word or phrase from the questioned-speaker recording and a spectrogram of the same word or phrase from recordings of the suspects (and sometimes of foil speakers).

Known speakers and foil speakers are asked to say the word or phrase in the same manner as the unknown speaker by repeating after a model or reading the text.

The model is a person who speaks the required phrases in the same fashion as they were said in the recordings. Multiple phrases and multiple repetitions of the same phrase are collected in order to evaluate intra-speaker variability.

The spectrograms are then analyzed by the examiner, who compares the same sounds in the same words (comparing the same sounds in different words is usually not recommended).

The spectrograms above show the differences between an American and a British female speaker saying the phrase “master plan” (audio taken from Oxford Learner’s Dictionaries). Notice the differences in brightness in the “a” sound, marked by the white rectangles. The brighter the image, the more sound energy there is at a given time and frequency.

The spectral characteristics can reveal pronunciation features typical of specific varieties of a language, such as different vowel phonemes (compare typical American and British pronunciations of the first syllable in “master”), as well as differences in pronunciation of the same phoneme (the missing energy in the higher frequencies of the American speaker’s rendition of the word “plan” reveals her nasalized pronunciation before the final “n” sound).
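To illustrate what a spectrogram actually computes, here is a minimal sketch in plain Python: the signal is cut into short overlapping frames, each frame is tapered with a window, and the magnitude of its Fourier transform is expressed in decibels. Larger dB values correspond to brighter pixels in an image like the one above. The 32 ms frame, 10 ms hop, and Hamming window are illustrative assumptions, not settings prescribed for forensic work, and real tools use an FFT rather than this slow naive DFT.

```python
import cmath
import math


def spectrogram(signal, sample_rate, frame_ms=32.0, hop_ms=10.0):
    """Return one list of magnitudes (in dB) per frame, with one value per
    frequency bin from 0 Hz up to sample_rate / 2."""
    frame_len = int(sample_rate * frame_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    # Hamming window: tapers frame edges to reduce spectral leakage.
    window = [0.54 - 0.46 * math.cos(2 * math.pi * n / (frame_len - 1))
              for n in range(frame_len)]
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [signal[start + n] * window[n] for n in range(frame_len)]
        row = []
        for k in range(frame_len // 2 + 1):
            # Naive DFT of bin k; real tools use an FFT instead.
            value = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_len)
                        for n in range(frame_len))
            row.append(20 * math.log10(abs(value) + 1e-12))  # "brightness"
        frames.append(row)
    return frames


# Demo: 0.1 s of a pure 1000 Hz tone sampled at 8 kHz.
sr = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sr) for t in range(sr // 10)]
frames = spectrogram(tone, sr)
bin_hz = sr / int(sr * 32.0 / 1000)  # 31.25 Hz per frequency bin
peak_bin = max(range(len(frames[0])), key=lambda k: frames[0][k])
print(len(frames), "frames; loudest bin at", peak_bin * bin_hz, "Hz")
```

In a real spectrogram the same computation runs over speech rather than a pure tone, so the energy is spread across several frequency regions that shift over time.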

The spectrographic analysis is usually combined with listening to the recordings, which results in the auditory-spectrographic approach.

As with auditory methods, the conclusions in the spectrographic approach are subjective and based on experience rather than on quantitative measurements and databases.

In Europe and the USA, the spectrographic approach is considered rather anachronistic. In China, it is recommended in the guidelines issued by the Ministry of Justice and the Ministry of Public Security.

Acoustic-Phonetic Approach

The acoustic-phonetic approach compares the quantitative measurements of phonetic units’ characteristics in both recordings.

Usually, a practitioner identifies the comparable phonetic units (phonemes, allophones, or the sequences of allophones) of the questioned- and known-speaker’s recordings and measures and compares their parameters.

Typically, the parameters measured are formant frequencies, fundamental frequency, and voice-onset time.

If enough tokens of the same unit are found in the questioned- and known-speaker recordings, their acoustic properties are measured in all recordings and subjected to statistical analysis. This is called the acoustic-phonetic statistical approach.

Acoustic measurements are usually made and compared using software.

However, human supervision is required when selecting units for measurement and setting algorithm parameters.

This usually involves a great investment of human labor, especially if the method is evaluated before the results are presented in court.

The results of acoustic-phonetic statistical methods fit into the likelihood ratio paradigm. However, their performance is usually poorer than that of automatic methods.

Automatic Approach

Automatic voice comparison systems, also known as Speaker Identification (SID) or Automatic Speaker Recognition (ASR) systems, were introduced into forensics in the 2000s, when computer systems for voice recognition had evolved considerably.

Automatic voice comparison, like an acoustic-phonetic approach, is based on the quantitative measurement of acoustic properties of speech. The measurement results are then used as inputs for statistical models.

Due to fast signal processing and statistical modeling in ASR systems, thousands of test pairs can be compared very quickly.

In automatic voice comparison systems, spectral measurements are usually made for every short segment, generally from 10 to 30 ms long.

These measurements are then used to build a mathematical model of the speaker’s voice, using statistical or artificial intelligence methods.

Current state-of-the-art ASR systems use Deep Neural Networks (DNNs) to create a speaker model (a voiceprint). DNN-based systems, which are usually trained on large sets of speaker recordings, are significantly more accurate and faster than earlier systems.

The voice models (voiceprints) of the questioned and known speakers are then compared to each other and to a population set model in order to evaluate the ratio between the likelihoods of two hypotheses:

- The two compared voice recordings were produced by the same speaker

- The two compared voice recordings were produced by different speakers
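The text does not specify how the voiceprints are compared. One common back-end for DNN embeddings is cosine scoring, sketched below under that assumption with invented four-dimensional vectors (real embeddings have hundreds of dimensions). Note that the raw score is a similarity, not a likelihood ratio: turning it into an LR requires knowing how scores are distributed for same-speaker and different-speaker pairs in the relevant population, which is the role of the population model and of calibration.

```python
import math


def cosine_score(a, b):
    """Cosine similarity between two speaker embeddings (1 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Invented 4-dimensional embeddings, for illustration only.
questioned = [0.9, 0.1, -0.3, 0.4]
known = [0.8, 0.2, -0.2, 0.5]
other = [-0.4, 0.7, 0.6, -0.1]

print(round(cosine_score(questioned, known), 3))  # high similarity
print(round(cosine_score(questioned, other), 3))  # low similarity
```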

Likelihood Ratio (the Result of Automatic Forensic Voice Comparison)

Typically, automatic voice comparison results in the Likelihood Ratio (LR) of two hypotheses.

The LR reflects how much more likely a particular piece of evidence is under one hypothesis than under the other.

So, LR = n means that the observed properties of the voice on the questioned-speaker’s recording (the evidence) are n times more likely had this recording been produced by the known speaker (the same-speaker hypothesis) than had it been produced by some other speaker randomly selected from the relevant population (the different-speaker hypothesis).

In other words, the value of the likelihood ratio is a numeric expression of the strength of the evidence regarding the competing hypotheses.

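For mathematical convenience, the result is often presented as a Log Likelihood Ratio (LLR), that is, the logarithm (usually decimal or natural) of the likelihood ratio, because the log scale is symmetric about zero: an LR of n and an LR of 1/n give LLRs of the same magnitude and opposite sign. With $E$ denoting the evidence, $H_{ss}$ the same-speaker hypothesis, and $H_{ds}$ the different-speaker hypothesis:

$$\mathrm{LLR} = \log_{10} \mathrm{LR} = \log_{10} \frac{p(E \mid H_{ss})}{p(E \mid H_{ds})}$$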

Thus, large positive LLRs mean the evidence is much more likely under the same-speaker hypothesis than under the different-speaker hypothesis.

Conversely, large negative LLRs mean that the evidence is much more likely under the different-speaker hypothesis than under the same-speaker one. An LLR of 0 (an LR of 1) means that the evidence supports neither hypothesis over the other.

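The numerator (the probability of the evidence under the same-speaker hypothesis) reflects the similarity of the two voices, and the denominator (its probability under the different-speaker hypothesis) reflects their typicality in the relevant population.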

This way of presenting results fits the widely accepted Bayesian paradigm, which expresses the strength of evidence instead of giving a binary decision.
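Converting between LR and LLR takes one line in each direction. The sketch below (the function names are illustrative, not from any particular tool) shows the symmetry that makes the log scale convenient: an LR of 100 and an LR of 1/100 map to +2 and -2.

```python
import math


def to_llr(lr: float, natural: bool = False) -> float:
    """Log likelihood ratio: decimal log by default, natural log if asked."""
    return math.log(lr) if natural else math.log10(lr)


def to_lr(llr: float, natural: bool = False) -> float:
    """Inverse conversion, from LLR back to LR."""
    return math.exp(llr) if natural else 10 ** llr


# An LR of 100 and an LR of 1/100 are equally strong, in opposite directions.
for lr in (100.0, 1.0, 0.01):
    print(lr, to_llr(lr))
```

A report should always state which base is used, since a decimal LLR of 2 and a natural LLR of 2 correspond to very different LRs (100 versus about 7.4).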

Bayesian Paradigm

Considering similarity only is not enough.

Suppose the questioned-speaker voice is very similar to the known-speaker voice but also very typical. In such a case, it could also be similar to the voice of some other speaker randomly selected from the relevant population.

On the other hand, if the questioned-speaker and known-speaker voices have very similar features, which are atypical in the relevant population, then it is very unlikely that a voice randomly selected from the relevant population would be equally or more similar to the questioned-speaker voice.

Likelihood ratio reflects this relation:

- More similarity and less typicality lead to a greater likelihood ratio and stronger support of the same-speaker hypothesis.

- Less similarity and more typicality lead to an LR closer to zero and stronger support for the different-speaker hypothesis. An LR close to 1 means that the evidence does not strongly support either hypothesis.

So, the strength of the evidence is reflected not by the absolute value of the likelihood ratio but by how far it is from 1 on a logarithmic scale: an LR of 100 and an LR of 1/100 are equally strong, pointing in opposite directions.
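The interplay of similarity and typicality can be shown with a deliberately simplified model. The sketch below assumes a single measured feature (for example, a speaker’s mean fundamental frequency), normal distributions, and invented parameter values. It is not how a real FVC system is built, but it reproduces the logic above: two pairs that are equally similar receive very different LRs depending on how common the voice is in the population.

```python
from statistics import NormalDist

# Toy single-feature model; all numbers are hypothetical.
WITHIN_SPEAKER_SD = 5.0            # assumed within-speaker variability (Hz)
POPULATION = NormalDist(120, 20)   # assumed relevant-population distribution


def toy_lr(questioned_value, known_speaker_mean):
    """LR = p(value | known speaker) / p(value | random population speaker)."""
    similarity = NormalDist(known_speaker_mean, WITHIN_SPEAKER_SD).pdf(questioned_value)
    typicality = POPULATION.pdf(questioned_value)
    return similarity / typicality


# Both pairs are 1 Hz apart, but one voice is typical and the other is not.
typical = toy_lr(121, 120)    # close to the population mean
atypical = toy_lr(181, 180)   # far from the population mean
print(round(typical, 1), round(atypical, 1))
```

The numerator is the same in both cases; only the denominator (typicality) changes, and with it the strength of support for the same-speaker hypothesis.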

Why is the expression of the strength of evidence as the likelihood ratio of two hypotheses more logical?

The court makes a decision based on multiple pieces of evidence (voice, fingerprints, DNA, etc.).

Moreover, the court might have a prior belief about the case, if only on the basis of the presumption of innocence. The result of voice comparison should update this prior belief, but by what mechanism?

If voice comparison results in a binary decision (“yes, the voices belong to the same person” or “no, the voices belong to different people”), it effectively replaces the verdict of the court. Analyses of other evidence might conflict with the voice comparison results, and in that case the trier of fact has to weigh each contribution subjectively.

What the trier of fact requires from a forensic practitioner is the strength of the voice evidence.

If the likelihood ratio is n, the given evidence is n times more likely to happen under the same-speaker hypothesis than under the different-speaker hypothesis.

Therefore, if before the voice comparison the trier of fact considered the same-speaker hypothesis, for example, ten times more likely than the different-speaker hypothesis (prior odds of 10), the new evidence multiplies these odds by n:

prior odds × n = posterior odds

The Bayesian paradigm thus provides a logically coherent way to update the prior odds to posterior odds in light of the strength of a particular piece of evidence.

In other words, it leaves the judgment to the court, which can rely on the forensic expert’s conclusions while taking other evidence into account.
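The update rule can be written in a few lines. The sketch below assumes the odds form of Bayes’ theorem and, when several LRs are combined, that the pieces of evidence are conditionally independent given each hypothesis, an assumption that has to be examined case by case. The prior odds belong to the court, not to the forensic practitioner; the numbers here are only illustrative.

```python
def posterior_odds(prior_odds, likelihood_ratios):
    """Odds form of Bayes' theorem: posterior odds = prior odds x LR.
    Multiplying several LRs assumes the pieces of evidence are
    conditionally independent given each hypothesis."""
    odds = prior_odds
    for lr in likelihood_ratios:
        odds *= lr
    return odds


def odds_to_probability(odds):
    """Convert odds in favor of a hypothesis into its probability."""
    return odds / (1.0 + odds)


# Prior odds of 10 (same-speaker hypothesis ten times more likely),
# then voice evidence with LR = 100.
post = posterior_odds(10.0, [100.0])
print(post, round(odds_to_probability(post), 4))
```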

The Pros and Cons of the Forensic Voice Comparison Approaches

In general, the auditory, acoustic-phonetic, and spectrographic methods are largely subjective (dependent on the training and experience of forensic practitioners), language-dependent, and time-consuming.

According to the annual proficiency tests introduced in many EU forensic voice comparison laboratories starting in 2017, the precision of the results obtained with these methods can vary significantly.

Automatic methods are considerably more objective than AAF methods.

Moreover, since the Methodological Guidelines for Best Practice in Forensic Semiautomatic and Automatic Speaker Recognition were introduced in 2015, automatic methods can be considered a universal methodology.

Also, thanks to their speed and standardized implementation, automatic (SID) methods are easy to test under various conditions, which makes it possible to estimate their performance and outline their limitations.

Nevertheless, ASR systems work best under conditions rarely met in forensics: relatively long, good-quality recordings, with matching recording conditions for the questioned- and known-speaker recordings.

Under real conditions, these systems still make errors, but their results can be improved considerably with calibration and channel-compensation techniques.
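The guidelines quoted later in this article favor calibrated likelihood ratios. One widely used way to calibrate raw comparison scores is linear logistic-regression calibration, LLR = a·score + b, with a and b fitted on scores from test pairs of known origin. The sketch below assumes that technique, fits it with plain gradient descent (a real system would use a proper optimizer and far more data), and weights the same-speaker and different-speaker classes equally so that the fitted log odds can be read as a natural-log LR. The training scores are invented.

```python
import math


def fit_calibration(ss_scores, ds_scores, steps=5000, step_size=0.1):
    """Fit llr = a * score + b by logistic regression, weighting the
    same-speaker and different-speaker classes equally so that the fitted
    log odds can be read as a natural-log likelihood ratio."""
    a, b = 1.0, 0.0
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for scores, target in ((ss_scores, 1.0), (ds_scores, 0.0)):
            weight = 0.5 / len(scores)  # each class contributes half
            for s in scores:
                p = 1.0 / (1.0 + math.exp(-(a * s + b)))
                grad_a += weight * (p - target) * s
                grad_b += weight * (p - target)
        a -= step_size * grad_a
        b -= step_size * grad_b
    return a, b


def calibrate(score, a, b):
    """Map a raw comparison score to a natural-log likelihood ratio."""
    return a * score + b


# Invented raw scores from test pairs whose origin is known.
same = [2.1, 2.8, 0.5, 1.2, 2.9, 3.4]
diff = [-1.5, -0.3, 0.9, -2.2, 1.4, -0.8]
a, b = fit_calibration(same, diff)
for s in (3.0, 0.7, -2.0):
    print(s, round(calibrate(s, a, b), 2))
```

Because the mapping is a monotonic linear function, calibration does not change which pairs score higher; it changes what the numbers mean, so that an output can be reported as an LR.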

A global INTERPOL survey on the use of speaker identification by law enforcement agencies, carried out in 2015 (91 responses from 69 countries), showed that human-supervised automatic methods of forensic voice analysis had grown in popularity since the previous survey in 2011.

The human-supervised automatic approach was the most popular in North America, the auditory-acoustic-phonetic approach was the most popular in Europe, and the spectrographic/auditory-spectrographic approach was the most popular in Africa and Central America.

Forensic Voice Comparison Challenges

Forensic voice comparison is more challenging than it is sometimes portrayed in movies.

Unlike speaker identification in a call center, the recording conditions are usually poor, and there is often a mismatch in channels and recording conditions between questioned- and known-speaker’s recordings.

The factors influencing system performance can be classified as extrinsic or intrinsic.

Extrinsic Factors

The extrinsic (technical) factors relate to recording conditions and transmission channels of the questioned- and known-speaker’s recordings.

Recording Conditions

What might affect the quality of the recordings?

The speaker could be far from the microphone or talk quietly, or too loudly, in which case the highest-amplitude parts of the sound are clipped and the signal becomes distorted.

There might also be loud background noise (traffic, music, machinery) partially masking the speaker’s voice (a low signal-to-noise ratio).

In some cases, multiple people are talking at once (babble), and recording in a small room with hard walls can cause strong reverberation (an echo effect).
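Two of these problems can be quantified directly. The sketch below (helper names are hypothetical; samples are assumed to be normalized to the range -1 to 1) estimates the signal-to-noise ratio in decibels, SNR = 10·log10(P_signal / P_noise), from a speech segment and a noise-only segment, and reports the fraction of samples stuck at full scale as a simple indicator of clipping. It assumes additive, stationary noise; real quality assessment is more involved.

```python
import math


def mean_power(samples):
    return sum(x * x for x in samples) / len(samples)


def estimate_snr_db(speech_plus_noise, noise_only):
    """SNR in dB, assuming additive noise whose power is the same in the
    speech segment and in the noise-only segment."""
    p_noise = mean_power(noise_only)
    p_speech = max(mean_power(speech_plus_noise) - p_noise, 1e-12)
    return 10 * math.log10(p_speech / p_noise)


def clipped_fraction(samples, full_scale=1.0, tolerance=1e-3):
    """Share of samples at (or within tolerance of) full scale."""
    return sum(abs(x) >= full_scale - tolerance for x in samples) / len(samples)


sr = 8000
# Demo 1: a tone recorded too loudly is flattened at +/-1.0 (clipping).
loud = [1.2 * math.sin(2 * math.pi * 200 * t / sr) for t in range(sr)]
clipped = [max(-1.0, min(1.0, x)) for x in loud]
print(round(clipped_fraction(clipped), 2))

# Demo 2: a "speech" tone with power 1.0 plus noise with power 0.01.
speech = [math.sqrt(2) * math.sin(2 * math.pi * 200 * t / sr) for t in range(1000)]
noise = [0.1, -0.1] * 500
noisy = [s + n for s, n in zip(speech, noise)]
print(round(estimate_snr_db(noisy, noise), 1))
```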

Another challenge is the duration of the recordings: the questioned recording (and sometimes the suspect’s recording as well) could be very short. In addition, the time interval between the questioned and suspect recordings could be very long (many years).

Channel Effects

The other factors that affect the quality of the recordings are so-called channel effects (i.e., the voice transmission and storage methods).

Landlines, mobile phones, and Voice over Internet Protocol (VoIP) services such as Skype, WhatsApp, and others have different passbands (the ranges of frequencies they transmit) and distort frequencies close to the edges of those passbands.

In mobile telephony, the signal is transmitted between the phone and a base station. Therefore, signal quality is affected by buildings or other objects between the handset and the nearest base station. Also, the signal is transmitted in small portions (packets), one after another, and sometimes whole packets are lost.

Mobile systems use compression and decompression algorithms (codecs) to reduce the amount of data to be transmitted and stored, which results in the loss of some information that would be useful for forensic comparison.

VoIPs are generally similar to mobile telephony and use lossy codecs as well.

Additionally, known-speaker and questioned-speaker’s recordings often mismatch in channels – a problem that significantly affects the accuracy of voice comparison and, therefore, should also be addressed.

Intrinsic Factors

Apart from the extrinsic factors mentioned above, there are also intrinsic (speaker) factors that can greatly affect the results of FVC.

These factors can be divided into intra-speaker variability (differences within a speaker) and inter-speaker variability (differences across speakers).

For instance, speakers respond to the same change in conditions in different ways: some vary little, while others show a huge variation. This might affect the comparison results if, for example, one recording is a phone call with a ransom demand and the other is a police interview.

Moreover, samples of the same speaker can vary greatly – the speaker could be ill, stressed, under the influence of alcohol or other drugs, or the suspect might simply be uncooperative and try to disguise their voice.
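The two kinds of variability can be estimated from repeated measurements of the same feature. The sketch below uses invented mean-F0 values for three hypothetical speakers, each recorded several times: the intra-speaker (within-speaker) variance is the average variance of each speaker’s own measurements, and the inter-speaker (between-speaker) variance is the variance of the speaker means. A feature tends to be more useful for comparison when the second is large relative to the first.

```python
from statistics import mean, pvariance

# Hypothetical repeated measurements (e.g., mean F0 in Hz) per speaker.
measurements = {
    "speaker_A": [118.0, 122.0, 120.0, 125.0],
    "speaker_B": [150.0, 145.0, 155.0, 148.0],
    "speaker_C": [101.0, 99.0, 104.0, 96.0],
}

# Intra-speaker variability: spread of each speaker's own values, averaged.
within = mean(pvariance(values) for values in measurements.values())

# Inter-speaker variability: spread of the speaker means.
between = pvariance([mean(values) for values in measurements.values()])

print(round(within, 2), round(between, 2), round(between / within, 1))
```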

However, in automatic and semi-automatic methods, channel effects and mismatches in recording conditions can be compensated for through calibration, which reduces the impact of a mismatch.

There is also the challenge of the relevant population, as three sets of recordings are needed for automatic speaker recognition:

- A questioned recording

- A set of the suspect’s recordings

- A set of population recordings

The population recordings should be as close to the suspect as possible regarding language, accent, gender, race, age, etc.

Subsets of recordings from the relevant population are also needed for system calibration, validation, and evaluation of the results.

In some forensic cases, a reference population can be quite specific, for example, speakers of a rare dialect. Therefore, collecting recordings from the relevant population can be a very challenging task.
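Selecting population recordings and splitting them into subsets can be sketched as follows. The metadata fields, matching criteria, and 50/50 split are hypothetical. The one design choice worth noting is that the split is made by speaker, so no speaker’s recordings end up in both the calibration and the validation subsets; otherwise the validation results would tend to be optimistic.

```python
import random
from dataclasses import dataclass


@dataclass
class Recording:
    speaker_id: str
    language: str
    accent: str
    sex: str
    age: int


def relevant_population(recordings, language, accent, sex, age_range):
    """Keep recordings whose speakers match the case-specific criteria."""
    low, high = age_range
    return [r for r in recordings
            if r.language == language and r.accent == accent
            and r.sex == sex and low <= r.age <= high]


def split_by_speaker(recordings, seed=0):
    """Split into two speaker-disjoint subsets (e.g., calibration/validation)."""
    speakers = sorted({r.speaker_id for r in recordings})
    random.Random(seed).shuffle(speakers)
    first_half = set(speakers[: len(speakers) // 2])
    part_a = [r for r in recordings if r.speaker_id in first_half]
    part_b = [r for r in recordings if r.speaker_id not in first_half]
    return part_a, part_b


# Invented metadata: eight matching speakers, one with a second recording,
# plus one speaker with a different accent who should be filtered out.
pool = [Recording(f"spk{i}", "English", "Irish", "male", 25 + i) for i in range(8)]
pool.append(Recording("spk0", "English", "Irish", "male", 25))
pool.append(Recording("spk9", "English", "Scottish", "male", 30))

population = relevant_population(pool, "English", "Irish", "male", (20, 40))
calibration_set, validation_set = split_by_speaker(population)
print(len(population), len(calibration_set), len(validation_set))
```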

Using Forensic Voice Comparison in Court as Evidence

Voice evidence in forensics is increasingly common. It is now rare for recordings from a smartphone or another device not to be mentioned as evidence.

Consequently, demand for voice comparison and analysis in forensics is increasing along with the number of voice recordings to compare.

Often a combination of approaches is used to analyze voice evidence from all possible perspectives.

As was mentioned above, the main approaches are auditory, acoustic-phonetic, spectrographic, and automatic.

Paradigm Shift

In the 1990s, a new paradigm, the “Bayesian paradigm”, was introduced in DNA testing and gradually spread to other branches of forensic science, including forensic voice comparison.

As was described above, within this paradigm, the forensic voice comparison result – likelihood ratio – is quantitative and probabilistic, based on statistical analysis, and takes into account not only the similarity of the voices to be compared but also their typicality across the reference population.

The result is the ratio of two probabilities: the probability of the evidence under the prosecution hypothesis (that the questioned- and known-speaker recordings were produced by the same person) and the probability of the evidence under the defense hypothesis (that different people produced them).

Unlike binary decisions, this presentation of the results provides the court with a simple and logical procedure for considering the strength of the voice evidence and modifying their prior belief according to this evidence.

Methodological Recommendations, Conclusions, and Surveys

In 2015, the European Network of Forensic Science Institutes (ENFSI), with the support of the European Commission, introduced Methodological Guidelines for Best Practice in Forensic Semiautomatic and Automatic Speaker Recognition.

The guidelines contain a detailed methodology for:

- Interpreting recordings as scientific evidence

- Calculating a likelihood ratio

- Using automatic methods under operating conditions

- Measuring the performance of a chosen method for evaluation and validation purposes

- Combining the ASR outputs with the outputs of other methods for presenting the results to the court

According to the guidelines: “calibrated likelihood ratios are the most desirable strength-of-evidence statements, and they are most compatible with the Bayesian interpretation framework. Calibrated likelihood ratios have a direct interpretation that can be reported and explained to the court. For example, if the likelihood ratio of a case has a value of 100, it is 100 times more likely to observe speech evidence supporting the H0 hypothesis than the H1 hypothesis given the questioned-speaker’s and suspected-speaker’s recordings, as well as the relevant population database.”

Evaluation and validation methods have also been among the central issues in forensic science.

The important question is: How can the system be proven to be good enough for its output to be used in court, given the results of its validation in a particular case’s conditions?

In 2017, G. Morrison and W. Thompson analyzed how courts evaluated the admissibility of a new generation of FVC testimony and described the potentially admissible FVC analysis.

They concluded that the necessary and sufficient criterion of admissibility is an empirical demonstration of the system’s degree of validity and reliability.

This means that the FVC method used should be tested under conditions as close as possible to those of the particular case, using recordings from the relevant population.

In 2021, the Consensus on Validation of Forensic Voice Comparison, a document developed by a group of experts with knowledge and experience in validating FVC systems, was published.

It contains recommendations for forensic speech laboratories on system calibration, on validation and evaluation procedures, and on which information and results should be presented to the court.

Given the rapid changes in the forensic field and the fast development of automatic voice comparison systems, a new survey of current practices in forensic voice comparison was conducted in 2019, with responses from 39 laboratories and individual practitioners across 23 countries.

The survey showed that:

- 2% of respondents used automatic systems (ASR) compared to 17% in 2011

- 1% of ASR users calibrated their systems

- 7% of ASR users had access to reference populations

- 3% of ASR users indicated that they provided numerical LRs at some point in their case reports (35.7% did not)

As the numbers above show, forensic voice comparison is in the midst of a paradigm shift. However, experts working within the Bayesian framework are still in the minority.

How Accurate Is Forensic Voice Comparison?

Forensic experts use different tools to provide the court with the most accurate results of forensic analysis. Some methods are based on a practitioner’s experience and qualifications, others on up-to-date technologies and automatic systems.

The accuracy of the results is a crucial question. But the same method can show different levels of accuracy under different conditions.

Voice comparison in forensics is very different from voice comparison in call centers.

In forensics, it is common to have poor recording conditions and a mismatch in recording conditions and speaking styles between the known- and questioned-speaker recordings, factors that significantly affect accuracy.

Plus, all these factors can vary greatly from case to case. Thus, it is strongly recommended that FVC methods be validated under conditions close to those of the case at hand before their results are presented in court.

Accuracy and Precision

In forensic science, two distinct concepts are used: validity and reliability.

Validity is a synonym for accuracy, and reliability is a synonym for precision. The accuracy (validity) of a system reflects how close the mean of its results is to the true value (a reflection of systematic error).

The precision (reliability) of a system reflects how close repeated results are to each other (a reflection of random error).
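The distinction can be made concrete with repeated measurements of a quantity whose true value is known. In the illustrative sketch below, two hypothetical pitch trackers measure a synthetic 100 Hz tone, and their outputs are invented. Bias (the mean minus the true value) reflects systematic error, and the standard deviation of the results reflects random error.

```python
from statistics import mean, stdev


def bias_and_spread(results, true_value):
    """Bias = mean error (systematic); spread = standard deviation (random)."""
    return mean(results) - true_value, stdev(results)


TRUE_F0 = 100.0  # frequency of a synthetic test tone, in Hz

# Invented outputs of two hypothetical pitch trackers measuring that tone.
tracker_1 = [104.9, 105.1, 105.0, 104.8, 105.2]  # precise but biased
tracker_2 = [97.0, 103.0, 99.0, 102.0, 99.0]     # unbiased but less precise

for name, results in (("tracker_1", tracker_1), ("tracker_2", tracker_2)):
    bias, spread = bias_and_spread(results, TRUE_F0)
    print(name, "bias:", round(bias, 2), "spread:", round(spread, 2))
```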

Within the AAF, the accuracy and precision of the results depend on the practitioner’s experience and knowledge.

Within the Bayesian framework, accuracy and precision can be evaluated using statistical methods and relevant populations.

To assess the accuracy and precision of the output, the system can be tested on a large number of recording pairs (test data) where the same or different origin of each pair is known.

Let’s explore the validation process in more detail.

Validation Process

Validation means testing a system under casework conditions. The system could be acoustic-phonetic, auditory, or automatic, and it includes any choices made by a human (the practitioner is part of the system).

The system being validated is treated as a black box. The test data consist of pairs of recordings for which it is known whether each pair was produced by the same speaker or by different speakers; the system’s output for each pair is then compared with this known answer.

The test data should meet the following requirements:

- The speakers should belong to the relevant population (in terms of gender, race, language, and accent)

- One member of each test pair should reflect the recording conditions of the known-speaker recording, and the other member those of the questioned-speaker recording
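Building the test pairs can be sketched as follows, assuming each test recording is labeled with its speaker and with the condition it was made to mirror (“known” or “questioned”). Every known-condition recording is paired with every questioned-condition recording, and each pair is labeled same-speaker or different-speaker. The labels, file names, and tuple layout are hypothetical.

```python
from itertools import product


def make_test_pairs(recordings):
    """recordings: list of (speaker_id, condition, recording_id) tuples,
    where condition is 'known' or 'questioned'. Returns labeled pairs."""
    known = [r for r in recordings if r[1] == "known"]
    questioned = [r for r in recordings if r[1] == "questioned"]
    pairs = []
    for k, q in product(known, questioned):
        label = "same" if k[0] == q[0] else "different"
        pairs.append((k[2], q[2], label))
    return pairs


# Invented test set: interview-style "known" and phone-style "questioned" audio.
test_set = [
    ("spk1", "known", "spk1_interview.wav"),
    ("spk1", "questioned", "spk1_phone.wav"),
    ("spk2", "known", "spk2_interview.wav"),
    ("spk2", "questioned", "spk2_phone.wav"),
    ("spk3", "questioned", "spk3_phone.wav"),
]
pairs = make_test_pairs(test_set)
for pair in pairs:
    print(pair)
```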

The relevant population, as well as recording conditions, can vary greatly from case to case.

Therefore, the system should be tested under the conditions reflecting those of the current case, and the results of the validation might not be applicable for another case.

The result of the evaluation is the error rate in the case of the AAF or Likelihood Ratio Cost (Cllr) in the case of automatic methods.

Cllr is essentially the mean of the penalty values over the test pairs (the same-speaker and different-speaker pairs are averaged separately and given equal weight). A penalty value is low if the (log) likelihood ratio strongly supports the correct hypothesis and high if it strongly supports the incorrect one.

The better the system’s performance, the lower the error rate or Cllr.
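Cllr has a compact standard formula: Cllr = ½ × [mean over same-speaker pairs of log2(1 + 1/LR) + mean over different-speaker pairs of log2(1 + LR)]. The sketch below implements that formula; the LR values in the example are invented. A system that always answers LR = 1 (no information) scores exactly 1, a well-calibrated and discriminating system approaches 0, and a system that confidently supports the wrong hypotheses can score above 1.

```python
import math


def cllr(same_speaker_lrs, different_speaker_lrs):
    """Log-likelihood-ratio cost, in bits. Each test pair gets a penalty that
    is small when its LR supports the correct hypothesis and large when it
    strongly supports the wrong one; the two classes are weighted equally."""
    ss = sum(math.log2(1 + 1 / lr) for lr in same_speaker_lrs) / len(same_speaker_lrs)
    ds = sum(math.log2(1 + lr) for lr in different_speaker_lrs) / len(different_speaker_lrs)
    return 0.5 * (ss + ds)


# An uninformative system (always LR = 1) scores exactly 1.
print(cllr([1.0, 1.0], [1.0, 1.0]))
# Invented LRs: mostly supporting the correct hypothesis, one error per class.
print(round(cllr([100.0, 20.0, 0.5], [0.01, 0.2, 3.0]), 3))
```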

There are, however, two important questions:

- Is the population set representative enough of the relevant population?

- Are the recording conditions of the test data similar enough to those of the current case?

The choice of test data must satisfy both the tester of the system and the court, yet so far this choice has largely been a subjective decision.

However, there is considerable research on how changes in the data set affect the system’s performance.

In any case, with increasing pressure on forensic laboratories to validate their performance, there is a clear shift in forensic analysis: from binary answers to Bayesian thinking, from qualitative results to likelihood ratios, and from subjective, experience-based results to empirical validation under real conditions.

In 2016 in the USA, the President’s Council of Advisors on Science and Technology issued its Report on Forensic Science in Criminal Courts: Ensuring Scientific Validity of Feature-Comparison Methods. It recommends: “where there are not adequate empirical studies and/or statistical models to provide meaningful information about the accuracy of a forensic feature-comparison method, DOJ attorneys and examiners should not offer testimony based on the method.”

In the same year, a validation instrument called Forensic_eval_01 was introduced.

What Is Forensic_Eval_01?

Developed by Geoffrey Morrison and Ewald Enzinger, the Multi-laboratory evaluation of forensic voice comparison systems under conditions reflecting those of a real forensic case, or Forensic_eval_01, was first introduced in the Speech Communication journal in 2016.

The authors also collected and released a set of training and test data that is representative of the relevant population and reflects the conditions of that real forensic case. Any forensic voice comparison laboratory or research laboratory can use this set to train and test its systems.

Forensic_eval_01 enables objective testing of existing forensic voice comparison systems. It can also be used for research, for example, on how the size and design of the reference population affect the accuracy of the results.

Examples of Systems Validated by Forensic_Eval_01

The results of the validation of several forensic voice comparison systems have been published in the special issue of the Speech Communication journal.

Validation of BatVox 4.1 with different operational parameters was conducted at the Netherlands Forensic Institute in 2016. The analysis showed that a larger population set correlated with better results.

Also, Nuance Forensics 9.2 and Nuance Forensics 11.1, which include some Deep Neural Network (DNN) functionalities, were validated using forensic_eval_01. The results showed that the use of DNN technology led to improved results. The size of the reference population, which was used for the purpose of calibration, did not matter.

Forensic voice comparison systems developed by Phonexia were tested in 2019 and 2021. The tests showed that Phonexia Voice Inspector powered by Phonexia Deep Embeddings™ – the world’s first commercially available speaker identification technology based entirely on Deep Neural Networks – outperformed the other voice comparison systems and represented the most accurate forensic comparison system available on the market.

As was mentioned before, Morrison and his colleagues collected a set of training and test data for forensic_eval_01 purposes. The set contains voice recordings of 500+ Australian-English speakers and is available for research and testing purposes at databases.forensic-voice-comparison.net.

Of course, more voice-recording data sets can be expected to emerge in the future. However, collecting population sets remains one of the most costly and time-consuming tasks in the field.

Frequently Asked Questions About Forensic Voice Comparison

Is It Possible to Fool a Forensic Voice Comparison?

No voice comparison method is 100% reliable.

For example, a 2021 evaluation of the latest generation of Phonexia Speaker Identification technology on forensic_eval_01 achieved an Equal Error Rate (EER) of 1.2% after calibration, an extremely good result. Even so, the accuracy is not 100%.
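The Equal Error Rate is the error rate at the decision threshold where the false-acceptance rate (different-speaker pairs wrongly accepted) equals the false-rejection rate (same-speaker pairs wrongly rejected). The sketch below shows one simple way to estimate it, assuming one score per test pair where higher scores favor the same-speaker hypothesis. The function name and the plain threshold sweep are illustrative choices, not taken from any particular product.

```python
from typing import Sequence, Tuple


def equal_error_rate(same_scores: Sequence[float],
                     diff_scores: Sequence[float]) -> Tuple[float, float]:
    """Estimate the EER by sweeping a threshold over all observed scores.

    A pair is accepted as same-speaker when its score >= threshold.
    Returns (eer, threshold).
    """
    best_gap, best_eer, best_threshold = float("inf"), 1.0, 0.0
    for threshold in sorted(set(same_scores) | set(diff_scores)):
        # False rejections: same-speaker pairs scored below the threshold.
        frr = sum(s < threshold for s in same_scores) / len(same_scores)
        # False acceptances: different-speaker pairs scored at or above the threshold.
        far = sum(s >= threshold for s in diff_scores) / len(diff_scores)
        if abs(far - frr) < best_gap:
            # Where the two rates do not meet exactly, report their midpoint.
            best_gap, best_eer, best_threshold = abs(far - frr), (far + frr) / 2, threshold
    return best_eer, best_threshold


eer, threshold = equal_error_rate([2.1, 3.5, 0.4, 4.0], [-1.2, 0.9, -3.0, -0.5])
print(f"EER = {eer:.1%} at threshold {threshold}")
```

Unlike Cllr, the EER only reflects how well the scores separate the two kinds of pairs at a single threshold; it says nothing about whether the LR values themselves are well calibrated.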

A criminal might try to fool the system by using low-quality channels, adding background noise, or mixing more than one voice. These factors can indeed reduce accuracy.

However, as technologies are constantly evolving, it is an extremely difficult task to deliberately fool a voice biometric system powered by up-to-date technologies.

Plus, the accuracy can be greatly enhanced with the use of noise reduction technologies, audio source profiles, and calibration.

What Do LLR and LR Mean?

LR stands for likelihood ratio, the result of a statistical comparison of two competing models (hypotheses). It is a number that expresses how many times more likely the evidence is under one model than under the other.

In forensic voice comparison, one model is the same-speaker hypothesis and the other is the different-speaker hypothesis, and the evidence is the questioned voice recording.

LR = n means that the given voice evidence is n times more likely under the same-speaker hypothesis than under the different-speaker hypothesis.

LR takes values in the interval (0; +inf).

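For mathematical convenience, the logarithm of LR is often used instead, with base e (natural logarithm) or base 10 (decimal logarithm). The abbreviation LLR stands for log-likelihood ratio. LLR is symmetrical about zero: LLR = 0 corresponds to LR = 1 (the evidence supports neither hypothesis), positive values support the same-speaker hypothesis, negative values support the different-speaker hypothesis, and the values lie in the interval (-inf; +inf).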
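The relationship between LR and LLR can be checked in a few lines of Python. This sketch simply applies the standard library's logarithm functions; the helper names `to_llr` and `from_llr` are our own.

```python
import math


def to_llr(lr: float, natural: bool = False) -> float:
    """Convert a likelihood ratio to a log-likelihood ratio (decimal log by default)."""
    if lr <= 0:
        # LR lies in (0; +inf), so only positive values have a logarithm.
        raise ValueError("a likelihood ratio must be positive")
    return math.log(lr) if natural else math.log10(lr)


def from_llr(llr: float, natural: bool = False) -> float:
    """Convert a log-likelihood ratio back to a likelihood ratio."""
    return math.exp(llr) if natural else 10.0 ** llr


for lr in (1000.0, 1.0, 0.001):
    print(f"LR = {lr:g} -> LLR = {to_llr(lr):+.1f}")
```

LR = 1000 and LR = 0.001 map to LLR = +3 and LLR = -3: equally strong support for opposite hypotheses, which is the symmetry described above.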

What Are the Pros and Cons of Automatic Voice Comparison?

The strengths of automatic voice comparison include the following:

- It takes into account not only the similarity of the voice but also its typicality, which better reflects reality

- It results in a quantitative measurement of the strength of the evidence (likelihood ratio), which fits into the new forensic paradigm

- It is less subjective and less dependent on human skills and experience

- It is easily repeatable, allowing the method to be validated in every particular case under the case’s conditions

Nevertheless, automatic voice comparison systems can still produce errors. That is why testing a forensic voice comparison system under the conditions reflecting those of the particular case is highly recommended.

How to Evaluate the Accuracy of a Forensic Voice Comparison Method?

The accuracy of a forensic comparison method can be evaluated using a large number of test pairs of recordings for which it is known whether the recordings belong to the same speaker or to different speakers. The evaluated method is used to compare the test pairs, and its results are then compared with the known ground truth.
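The procedure can be sketched as a small harness that treats the method under test as a black box. It assumes the method returns a likelihood ratio for each pair and counts an LR that points toward the wrong hypothesis (LR < 1 for a same-speaker pair, LR > 1 for a different-speaker pair) as an error. The names `evaluate` and `toy_compare` and the file names are hypothetical placeholders.

```python
from typing import Callable, Dict, Iterable, Tuple

# (recording_a, recording_b, is_same_speaker), with the ground truth known in advance
TestPair = Tuple[str, str, bool]


def evaluate(compare: Callable[[str, str], float],
             pairs: Iterable[TestPair]) -> Dict[str, float]:
    """Run a black-box method over labeled pairs and report how often its LR points the wrong way.

    `compare` is assumed to return a likelihood ratio for two recordings.
    """
    same_total = same_wrong = diff_total = diff_wrong = 0
    for rec_a, rec_b, is_same in pairs:
        lr = compare(rec_a, rec_b)
        if is_same:
            same_total += 1
            same_wrong += int(lr < 1)  # supports the different-speaker hypothesis
        else:
            diff_total += 1
            diff_wrong += int(lr > 1)  # supports the same-speaker hypothesis
    return {
        "same_speaker_error_rate": same_wrong / same_total,
        "different_speaker_error_rate": diff_wrong / diff_total,
    }


# Hypothetical stand-in for a real system: treats the file-name prefix as the speaker ID.
def toy_compare(rec_a: str, rec_b: str) -> float:
    return 100.0 if rec_a.split("_")[0] == rec_b.split("_")[0] else 0.01


pairs = [("spk1_a.wav", "spk1_b.wav", True),
         ("spk1_a.wav", "spk2_a.wav", False),
         ("spk2_a.wav", "spk2_b.wav", True)]
print(evaluate(toy_compare, pairs))
```

The same LR outputs could also be fed into a Cllr calculation. Either way, the numbers are only as meaningful as the test pairs, which is why the data requirements below matter.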

It is important to note that any measurement of accuracy depends on both the test data and the method itself.

For meaningful results, the test data should be representative of the relevant population (in terms of language, accent, gender, age, etc.).

Moreover, the recording conditions of the test samples should match those of the known- and questioned-speaker’s recordings in terms of speaking style and the quality and duration of the recordings. Only then will the test results reliably show the system’s expected accuracy under the case conditions.

What Does Calibration of the System Mean?

The raw scores produced during likelihood ratio calculation must be calibrated before they can be interpreted correctly. The scores are calibrated using calibration sets from a population database.
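One widely used way to turn raw scores into likelihood ratios is a linear mapping, llr = a × score + b, trained by logistic regression on a calibration set with known same-speaker and different-speaker labels. The sketch below assumes that approach; the source does not state which calibration model any particular product uses, and the function names, step size, and number of steps are illustrative. Giving both classes equal total weight lets the fitted log-odds be read as a natural-log LR rather than as odds that depend on the mix of pairs in the calibration set.

```python
import math
from typing import Sequence, Tuple


def _sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def train_calibration(same_scores: Sequence[float], diff_scores: Sequence[float],
                      steps: int = 5000, step_size: float = 0.1) -> Tuple[float, float]:
    """Fit llr = a * score + b by class-balanced logistic regression (gradient descent)."""
    # Each class receives half of the total weight.
    data = ([(s, 1.0, 0.5 / len(same_scores)) for s in same_scores]
            + [(s, 0.0, 0.5 / len(diff_scores)) for s in diff_scores])
    a, b = 1.0, 0.0
    for _ in range(steps):
        grad_a = grad_b = 0.0
        for score, label, weight in data:
            # Gradient of the weighted cross-entropy loss with respect to a and b.
            error = _sigmoid(a * score + b) - label
            grad_a += weight * error * score
            grad_b += weight * error
        a -= step_size * grad_a
        b -= step_size * grad_b
    return a, b


def calibrated_llr(score: float, a: float, b: float) -> float:
    """Map a raw score to a natural-log likelihood ratio."""
    return a * score + b


# Hypothetical raw scores from a calibration set with known ground truth.
a, b = train_calibration([1.0, 2.0, 3.0, -0.5], [-1.0, -2.0, -3.0, 0.5])
print(math.exp(calibrated_llr(2.5, a, b)))  # LR for a new pair with raw score 2.5
```

A real calibration set would contain many more pairs recorded under the case conditions; with perfectly separated scores, plain logistic regression has no finite optimum and the slope keeps growing.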

In a well-calibrated voice comparison system, the result LR = 1000 (a likelihood ratio of 1000) means that the given evidence is 1000 times more likely to be obtained if the questioned speaker’s recording was produced by the known speaker (the suspect) than if it was produced by a different speaker from the relevant population. The court can then combine this information with any other pieces of evidence and with the prior odds the judge may already have.
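How the court can use the LR follows the odds form of Bayes' theorem: posterior odds = LR × prior odds. The sketch below illustrates only the arithmetic, with a purely hypothetical prior of 1 to 100 in favor of the same-speaker hypothesis; choosing the prior is the court's task, not the forensic practitioner's.

```python
def posterior_odds(prior_odds: float, likelihood_ratio: float) -> float:
    """Odds form of Bayes' theorem: posterior odds = LR * prior odds."""
    return likelihood_ratio * prior_odds


def odds_to_probability(odds: float) -> float:
    """Convert odds in favor of a hypothesis into a probability."""
    return odds / (1.0 + odds)


# Hypothetical prior odds of 1:100 for the same-speaker hypothesis, updated by LR = 1000.
prior = 1 / 100
post = posterior_odds(prior, 1000.0)
print(f"posterior odds = {post:.0f}:1, probability = {odds_to_probability(post):.3f}")
# prints "posterior odds = 10:1, probability = 0.909"
```

The same LR leads to very different posterior odds under different priors, which is why the forensic practitioner reports the LR rather than a probability that the suspect is the speaker.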

Calibration can also compensate for the effects of speech duration, channel, and speech quality.

In Phonexia Voice Inspector and other forensic voice comparison products, an Audio Source Profile is used for score calibration.

An Audio Source Profile is a representation of the speech source, e.g., the device, acoustic channel, distance from microphone, language, gender, etc. It contains all the information needed for any calibration and mean normalization.

During the comparison of speaker voiceprints, both sides can be calibrated either by the same profile (if they come from the same source) or by two different profiles (if they come from different sources).

What Is a Relevant Population?

For performing forensic voice comparison according to the Bayesian paradigm, three sets of recordings are needed:

- A questioned recording

- A set of the suspect’s (known) recordings

- A set of population recordings

The Bayesian model takes into account not only similarity but also the typicality of the voice features extracted from the questioned recording.

To estimate similarity, the questioned recording is compared to the known recording(s).

Then, to estimate typicality, the questioned- or suspect-speaker’s recordings are compared to the population recordings.

For that reason, the speakers in the population set must be as close as possible to the suspect – the same gender, race, nationality, age group, speaking the same language, having the same accent, etc.

In other words, the speakers must belong to the relevant population.
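The interplay of similarity and typicality can be illustrated with a deliberately simplified sketch. It assumes a single acoustic feature (invented mean fundamental-frequency values in Hz) modeled by one normal distribution for the suspect and one for the relevant population; real systems use many features and more elaborate models, and all numbers here are made up for illustration.

```python
import math
from statistics import mean, stdev
from typing import Sequence


def gaussian_pdf(x: float, mu: float, sigma: float) -> float:
    """Density of a normal distribution with mean mu and standard deviation sigma."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def feature_lr(questioned: float, suspect_values: Sequence[float],
               population_values: Sequence[float]) -> float:
    """Toy single-feature LR: similarity to the suspect divided by typicality in the population."""
    # Similarity: how well the questioned value fits a model of the suspect's voice.
    similarity = gaussian_pdf(questioned, mean(suspect_values), stdev(suspect_values))
    # Typicality: how common the questioned value is in the relevant population.
    typicality = gaussian_pdf(questioned, mean(population_values), stdev(population_values))
    return similarity / typicality


# Invented mean-F0 values (Hz), for illustration only.
suspect = [118.0, 121.0, 120.0, 119.0, 122.0]
population = [95.0, 105.0, 110.0, 120.0, 130.0, 140.0, 100.0, 125.0]
print(feature_lr(120.0, suspect, population))  # > 1: supports the same-speaker hypothesis
print(feature_lr(135.0, suspect, population))  # < 1: supports the different-speaker hypothesis
```

With the same similarity, a value that is common in the population yields a smaller LR than one that is rare, so a population set that does not match the suspect can distort the strength of the evidence.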

Because it might be difficult to obtain enough recordings from the relevant population, it is possible to use more general sets in the initial stages of the investigation (e.g., English-speaking males) and then collect a smaller but more targeted set of recordings to obtain stronger evidence for the court.

Forensic Voice Comparison Software

Voice Inspector by Phonexia utilizes the technology called Deep Embeddings™, which uses deep neural networks and offers the most accurate speaker identification technology available on the market.

Designed specifically for forensic experts, and trusted and evaluated by forensic experts from the Bundeskriminalamt and the Zurich Forensic Science Institute, Phonexia Voice Inspector performs language-independent, unbiased, and highly accurate forensic voice comparisons according to the ENFSI guidelines to support investigations and provide evidence in court.

The key features of Phonexia Voice Inspector:

- Accurate, unbiased, language-independent voice analysis by Automatic Speaker Recognition technology

- Easy-to-use graphical user interface (GUI)

- The software can easily be adjusted for use in any country via a population database

- Includes an SID evaluator that allows the software to be easily tested

- Automatic detection of speech segments and of recordings unsuitable for voice analysis due to high noise levels

- Built-in speaker diarization

- Phoneme recognition

- Spectrogram

Phonexia Voice Inspector is not meant to replace the work of forensic experts. Its purpose is to support expert-level decisions with automated methods.

Forensic Voice Comparison Glossary

- Acoustic Analysis is a method of voice comparison where a practitioner measures some acoustic properties of the voice (fundamental frequency and formant frequencies, also voice onset time, fricative spectra, nasal spectra, voice source properties, speaking rate, etc.) in the questioned- and known-speaker’s recordings, and compares them using plots and graphs in order to determine how much more these voice properties support the same-speaker hypothesis than the different-speaker hypothesis.

- Auditory Analysis is a method of voice comparison where a practitioner listens to the questioned- and known-speaker’s recordings and compares the voice properties (vocabulary choice, pronunciation of particular words and phrases, segmental pronunciation, intonation patterns, stress patterns, speaking rate, etc.) to determine how much more likely it is that these recordings belong to the same speaker than to different speakers.

- Automatic Forensic Voice Comparison is a method of Forensic Voice Comparison that is based on the quantitative measurement of acoustic properties of the human voice using state-of-the-art voice comparison software powered (typically) by deep neural networks.

- Automatic Speaker Recognition (ASR) – see Automatic Forensic Voice Comparison above.

- Forensic Speaker Identification – see Forensic Voice Comparison below.

- Forensic Voice Comparison (also known as Forensic Speaker Comparison, Forensic Speaker Recognition, and Forensic Speaker Identification) is the comparison of voice evidence (the questioned-speaker recording) with known recordings in a criminal investigation, using scientific methods to determine how much more likely it would be to observe the properties of this evidence if it had been produced by the known speaker than if it had been produced by some other speaker from the relevant population.

- Likelihood Ratio is the ratio between the likelihood of the given evidence under the same-speaker hypothesis (which says that the compared recordings are produced by the same speaker) and the likelihood of the given evidence under the different-speaker hypothesis (which says that different speakers produced the compared recordings).

- Linguistics is the scientific study of language and speech.

- Log-Likelihood Ratio is a logarithm (usually decimal or natural) of a Likelihood Ratio.

- Population Set is a set of voice recordings from the Relevant Population.

- Relevant Population is a set of speakers as close as possible to the suspect: of the same gender, race, nationality, and age group, speaking the same language with the same accent, etc.

- Spectrogram is a visual representation of a sound signal that shows how the amplitude of its frequency components changes over time.

- Voice Forensics is the field of forensic science related to analyzing voice recordings obtained during a criminal investigation.

Useful Forensic Voice Comparison Resources

- Find more detailed information about forensic voice comparison in Introduction to Forensic Voice Comparison by G. S. Morrison and E. Enzinger.

- Learn about the pros and cons of different methods of forensic voice comparison in the blog post by Stefan Gfroerer, former head of the Speaker Identification and Audio Analysis Department at the German Bundeskriminalamt.

- Read about voice biometry and its use in forensics in this PDF.

- Learn more about Forensic_eval_01, an instrument for multi-laboratory evaluation of any forensic voice comparison system, in this special issue of the Speech Communication journal.

- Watch a recording of the Phonexia webinar about Forensic Voice Comparison hosted in September 2022.